How to Calibrate Sensors for Autonomous Vehicles with Speed and Precision

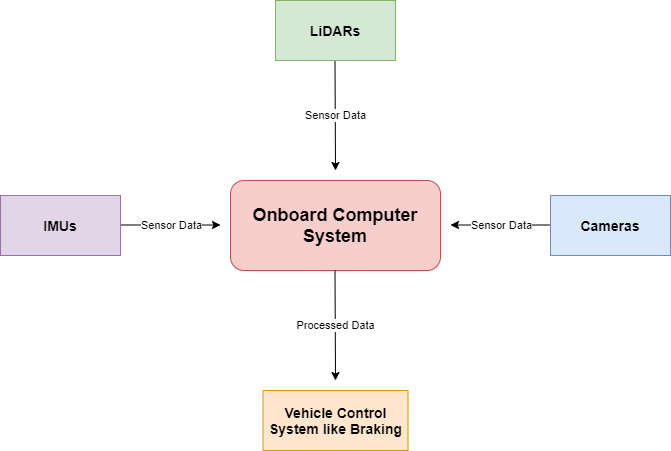

Autonomous vehicles (AVs) come equipped with a suite of sensors, including LiDARs, inertial measurement units (IMUs), cameras and radars. These sensors are placed at strategic points on the vehicle, such as the roof or the dashboard facing the road. Acting as the vehicle's eyes and ears, they collect spatial information about its surroundings. The AV's onboard computer system processes this information and then performs a variety of downstream "tasks" that let the vehicle move around on its own.

Some of these tasks include:

- Understanding what the region of the world around the vehicle looks like (perception).

- Predicting what changes will happen over the next few seconds (prediction).

- Performing precise actions, such as braking at an intersection. These actions demand precision, and mistakes here could have dire consequences.

In this article, we shed light on the technical side of AVs: how the sensors interact with the onboard system, and why accurate perception of real-world objects matters so much, since that is what makes the vehicle truly "autonomous".

For precise object identification, the sensors are mounted at strategic locations on the vehicle. But that placement leaves them exposed to the elements (temperature, pressure, wind and so on), not to mention the constant mechanical fatigue and vibration generated by the vehicle itself. Over time, these external stresses reduce the sensors' fidelity. Certain steps therefore need to be taken to keep the sensors functioning at their highest fidelity, including routine maintenance (such as clearing dust off the camera lens) and calibration. If the sensors aren't performing optimally, the AV might malfunction, which in the worst case could be disastrous.

With current technology, routine calibration and maintenance of AV sensors is a laborious procedure. On a brighter note, at Deepen we provide the right set of tools to simplify that process for you. In this article, we show how Deepen does most of the heavy lifting with ease and precision. Towards the end, we also introduce Deepen's Calibration Suite of products, which we hope will help you calibrate the sensors on your fleet of AVs more easily and precisely.

Why Do AVs Need Onboard Sensors?

As mentioned earlier, the onboard sensors collect data about the real world around the vehicle. This data is then processed so that the AV can:

- Perceive objects surrounding the vehicle and manoeuvre around them with the utmost safety and precision.

- Map static objects in the world, such as street signs and pavements, to keep the AV on the right track.

- Compute the AV's real-world coordinates. This information is then used to work out its direction, velocity and other related quantities.

- Finally, control the vehicle's manoeuvres based on the outputs of the first three tasks.

The diagram below shows how the suite of sensors and the onboard computer system interact with each other, giving the vehicle its autonomous features.

AVs perform these tasks to follow the rules of the road without endangering pedestrians or other vehicles around them. The onboard sensors therefore play a crucial role in the proper functioning of the AV. If they are left uncalibrated, the vehicle's core functions can suffer, and in the worst case the consequences of a malfunctioning AV could be unimaginably dire.

Sensor perception is therefore expected to maintain a high degree of accuracy when perceiving real-world agents. To understand this better, let's look at a real-world scenario of how sensors gather data.

Suppose we deploy an AV for trials. Like any other vehicle on the street, our trial AV has to identify traffic lights and/or other agents precisely. An agent could be a pedestrian crossing the street or another vehicle in front of it. In certain cases, the AV also has to maintain a safe distance from the agent. If it fails to identify the agent precisely, the trial AV could hurt people in and around the vehicle. The consequences of a whole fleet of such malfunctioning AVs are best left to the imagination.

Besides perceiving real-world agents and predicting their actions, the AV also needs to identify its route. Mapping the objects in the world around the AV is therefore another important task: mapping the edges of the street and the street signs helps the AV stay on track. Failing at this, or even a minor imperfection, could be disastrous. The AV could drive over a pavement and into an unsuspecting pedestrian, or worse.

Identifying the objects in the world and safely manoeuvring around them is only half of the AV's task. The vehicle must also be able to identify its precise current location on the street. An uncalibrated sensor might signal to the AV that it's on the right side of the road when its true position is actually in the oncoming lane!
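To put a rough number on this, here is a small sketch, assuming a simple flat-ground geometry in which the only error is a constant yaw (heading) misalignment in one sensor's extrinsic calibration. A point at range d straight ahead is then reported d·sin(Δψ) to the side of where it really is. The function name `lateral_error_m` is hypothetical.

```python
import math


def lateral_error_m(range_m: float, yaw_error_deg: float) -> float:
    """Sideways displacement of a point straight ahead at `range_m` metres when
    the sensor's yaw extrinsic is off by `yaw_error_deg` degrees (flat ground assumed)."""
    return range_m * math.sin(math.radians(yaw_error_deg))


# How a 1 degree yaw miscalibration grows with distance.
for distance in (10.0, 50.0, 100.0):
    print(f"{distance:5.0f} m ahead -> {lateral_error_m(distance, 1.0):.2f} m sideways")
```

With a 1° yaw error, a point 50 m ahead is misplaced by roughly 0.87 m and one 100 m ahead by about 1.75 m, a sizeable fraction of a typical lane width.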

As you can see, uncalibrated sensors on an AV are a ticking time bomb, ready to cause havoc without a moment's notice. But making the sensors aware of their surroundings is no straightforward task. To address these concerns, AV sensors are programmed to look for specific data points.

At Deepen, we developed our suite of software to address exactly these concerns. Our tools calculate the sensors' parameters along with error metrics, so you can adjust for a precise sensor calibration. With them, you need not worry about AVs malfunctioning on the street because of uncalibrated sensors, and you can get a good night's sleep as well.

More about our products soon, but first, let's take a deeper look at the kind of data the AV sensors collect. The next section covers what those data points are and how they're categorized into specific "parameters".

How Do the Onboard Sensors Collect Real-World Data?

As mentioned briefly earlier, the sensors collect spatial data about the world in real time, and this data is processed by an onboard computer system. That system in turn acts as a "control switch", giving the vehicle its autonomous features. The onboard system is configured in advance to expect particular kinds of input, so it relies on specific types of sensors. Cameras and LiDARs, for example, perceive the objects and agents around the vehicle, while IMUs (which combine accelerometers and gyroscopes) measure acceleration and rotation rate, from which the vehicle's velocity and orientation are estimated.

Each type of sensor therefore collects a different kind of data, which is then delivered to the system for post-processing.

To interpret that data correctly, the system also needs parameters that describe each sensor. These are categorized based on what they describe:

- Intrinsic parameters - These are the sensor's internal parameters. For a camera, they map a point in the camera's own coordinate frame to a pixel in the image (focal length, principal point and lens distortion). For a LiDAR, they describe how each laser return is converted into a point in the point cloud. Most of these parameters are static and are often set by the manufacturer for the given sensor type.

- Extrinsic parameters - These are the sensor's external parameters: its location and orientation on the vehicle, which depend on the sensor type and use case. Unlike intrinsic parameters, they aren't static and can change with external, dynamic factors.

- Temporal parameters - These are computed parameters, such as the time offsets between sensors, used to synchronize the timestamps of the different sensors on board the AV.
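To make the intrinsic/extrinsic split concrete, here is a minimal sketch of the standard pinhole camera model, assuming no lens distortion. The extrinsics are a rotation R and translation t that take a point from the vehicle frame into the camera frame (camera looking along +z); the intrinsics are the focal lengths fx, fy and the principal point cx, cy. The function names and numbers are illustrative and not taken from any Deepen tool.

```python
from typing import List, Sequence, Tuple

Matrix3 = List[List[float]]
Vec3 = Tuple[float, float, float]


def rotate(rotation: Matrix3, v: Sequence[float]) -> List[float]:
    """Multiply a 3x3 rotation matrix by a 3-vector."""
    return [sum(rotation[r][c] * v[c] for c in range(3)) for r in range(3)]


def project_to_pixel(point: Vec3, rotation: Matrix3, translation: Vec3,
                     fx: float, fy: float, cx: float, cy: float) -> Tuple[float, float]:
    """Map a 3D vehicle-frame point to pixel coordinates (distortion-free pinhole)."""
    # Extrinsic step: express the point in the camera frame, p_cam = R * p + t.
    x, y, z = (a + b for a, b in zip(rotate(rotation, point), translation))
    if z <= 0:
        raise ValueError("point is behind the camera")
    # Intrinsic step: perspective division, then focal lengths and principal point.
    return fx * x / z + cx, fy * y / z + cy


# Example: vehicle and camera frames coincide (identity rotation, zero translation).
IDENTITY = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
u, v = project_to_pixel((1.0, 0.5, 10.0), IDENTITY, (0.0, 0.0, 0.0),
                        fx=1000.0, fy=1000.0, cx=640.0, cy=360.0)
print(f"pixel = ({u:.1f}, {v:.1f})")
```

A wrong R or t shifts every projected point even if the intrinsics are perfect, which is why both sets have to be calibrated. Temporal parameters don't appear here; they matter when pairing a point with the camera frame captured at the same instant.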

At Deepen, we designed our tools to make technicians' and engineers' lives much easier. They do so by delegating the heavy lifting of computing calibration parameters and error metrics to our software.

Sensor manufacturers often don't provide the intrinsic parameters. In such situations, our tools take the state of the vehicle into account and calibrate the sensors, so each sensor receives a set of parameters unique to it. The technician is still required to provide the necessary input data to the software through our intuitive web UI. Refer to the docs on “Camera Intrinsics Calibration” to get a sense of how easy our tools are to use: the web app accepts checkerboard images as input and computes error metrics that can be used to refine the sensor calibration further!
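Which error metrics the web app reports isn't specified here. A widely used one for checkerboard-based intrinsics is the RMS reprojection error: the checkerboard corners are projected back into the image using the estimated parameters and compared with where they were actually detected (OpenCV's `calibrateCamera`, for instance, returns this value). The sketch below computes it from two corner lists; treating it as Deepen's metric is an assumption, and the corner values are made up.

```python
import math
from typing import Sequence, Tuple

Pixel = Tuple[float, float]


def rms_reprojection_error(detected: Sequence[Pixel], reprojected: Sequence[Pixel]) -> float:
    """Root-mean-square pixel distance between detected and reprojected corners."""
    if not detected or len(detected) != len(reprojected):
        raise ValueError("expected two non-empty corner lists of equal length")
    squared = [(du - ru) ** 2 + (dv - rv) ** 2
               for (du, dv), (ru, rv) in zip(detected, reprojected)]
    return math.sqrt(sum(squared) / len(squared))


# Corners found in one checkerboard image vs. the same corners reprojected
# through the current estimate of the camera intrinsics (hypothetical values).
detected = [(100.0, 100.0), (200.0, 100.0), (100.0, 200.0), (200.0, 200.0)]
reprojected = [(100.3, 100.4), (200.0, 100.0), (100.0, 200.0), (199.7, 199.6)]
print(f"RMS reprojection error: {rms_reprojection_error(detected, reprojected):.3f} px")
```

In practice the error is accumulated over every corner in every captured image, and a lower value indicates a better fit of the estimated parameters to the data.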

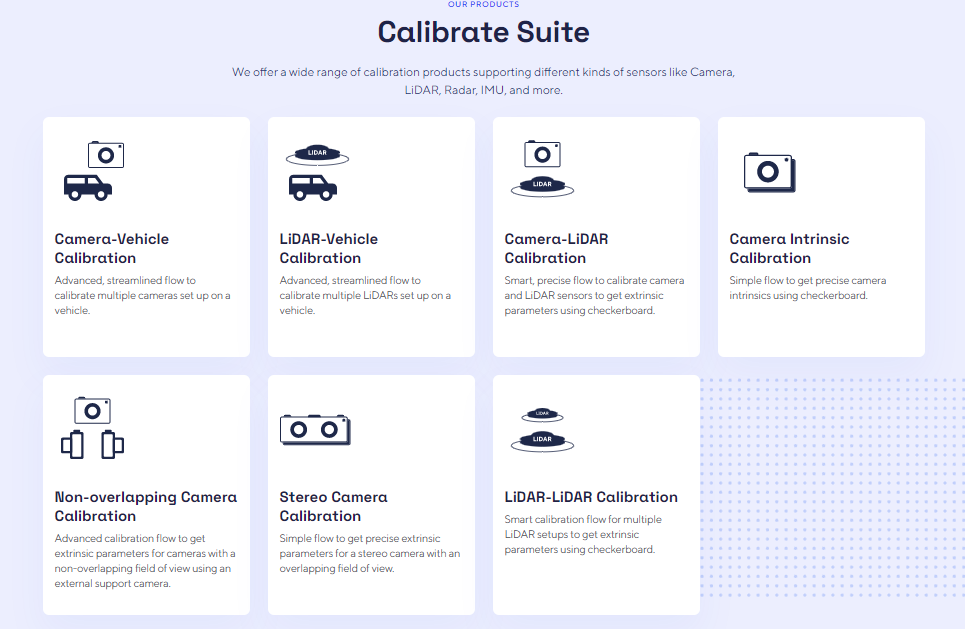

Besides camera intrinsics, our tools can also calibrate other sensors and sensor combinations. You can find an image of some of the products we offer at Deepen below, and each of these services is discussed briefly in a later section of the article.

While calibrating intrinsic parameters can be difficult for some sensors, calibrating extrinsic parameters is a genuine challenge in the AV industry today. Fortunately, some bright minds are working hard to resolve these issues, so there's hope for more innovative ways to calibrate extrinsic parameters in the near future.

Because the elements “outside the sensor” are dynamic, extrinsic parameters aren't as easy to calibrate. Based on existing research and best practices, we developed a workflow at Deepen that streamlines this procedure as well. With our software, you can rest assured that the sensors will be calibrated to their highest fidelity with minimal error.

Calibrating temporal parameters, in turn, requires all the onboard sensors to already be well calibrated. Each type of sensor operates at a different frequency and has its own processing delay, so the data gathered isn't always in sync. Temporal parameters are therefore calculated from the sensors' time delays. These computations can get quite complex and aren't recommended to be done by hand, which is why Deepen is working on tools to simplify these procedures as well.
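One common way to estimate a constant time offset between two sensors (one option among several, and not necessarily what Deepen's upcoming tools will do) is to find the shift that best aligns a signal both sensors can observe, for example the vehicle's yaw rate as measured by an IMU and as estimated from LiDAR odometry. The sketch assumes both signals have already been resampled to a common rate; the signals and function names are hypothetical.

```python
import math
from typing import Sequence


def pearson(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation of two equal-length windows (-inf if either is flat)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    if vx == 0 or vy == 0:
        return float("-inf")  # a flat window carries no alignment information
    return cov / math.sqrt(vx * vy)


def estimate_lag(reference: Sequence[float], delayed: Sequence[float],
                 max_lag: int, min_overlap: int = 10) -> int:
    """Shift k (in samples) for which delayed[i + k] best matches reference[i]."""
    best_lag, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        idx = [i for i in range(len(reference)) if 0 <= i + lag < len(delayed)]
        if len(idx) < min_overlap:
            continue
        score = pearson([reference[i] for i in idx], [delayed[i + lag] for i in idx])
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag


# Hypothetical yaw-rate signals at 100 Hz: the second sensor sees the same turn,
# but its timestamps lag the first sensor's by three samples.
rate_hz = 100.0
imu_yaw_rate = [0.0] * 20 + [1.0, 3.0, 5.0, 3.0, 1.0] + [0.0] * 20
lidar_yaw_rate = [0.0] * 3 + imu_yaw_rate[:-3]
offset_s = estimate_lag(imu_yaw_rate, lidar_yaw_rate, max_lag=5) / rate_hz
print(f"estimated time offset: {offset_s * 1000:.0f} ms")
```

Pearson correlation is used rather than a raw dot product so that differences in scale or bias between the two sensors don't skew the result. A production implementation would typically also refine the offset below one sample.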

AV fleet maintainers should also note that current industry standards mandate obtaining all three sets of parameters for sensor calibration. This practice ensures that all the sensors are functioning at optimal fidelity and that safety isn't compromised.

With Deepen's Calibration Suite, calibrating your sensors no longer has to be a laborious, time-consuming manual procedure. Our tools take care of most of the computational heavy lifting, so you can focus on the important tasks at hand.

A later section of the article delves deeper into how Deepen makes the most of standard industry practices to provide you with the best calibration products on the market. So, without further ado, let's look at the approaches we take to calibrate the extrinsic parameters of a wide range of AV sensors.

Approaches for Calibrating the Sensors

The previous sections showed why AV sensors need frequent calibration. We also looked at the categories of parameters involved in post-processing, and at how extrinsic parameters reflect the sensor's real-world placement and change over time. With these constraints in mind, we developed our tools to take the computational heavy lifting off the technician. Our software is modelled on tried-and-tested approaches, so you can rely on our tools to calibrate your AV fleets.

With that, let's look at some of the approaches developed to calibrate sensors' extrinsic parameters.

The following are some of the most well-developed techniques currently in industrial use:

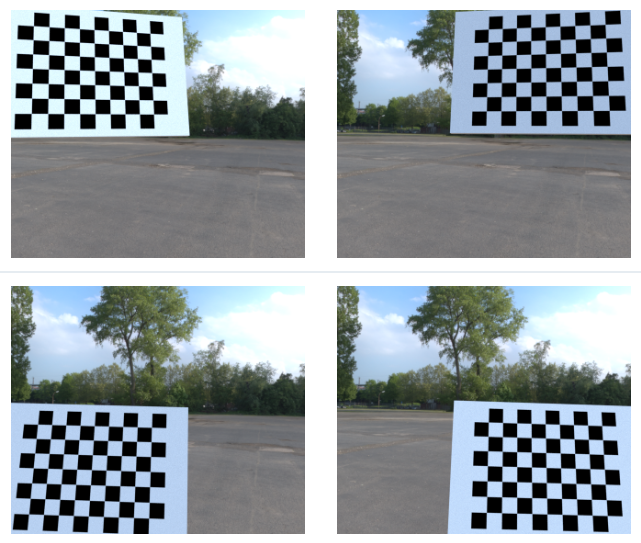

- Target-based, wherein targets are set up at specific locations around the vehicle for the sensors to detect. The targets are often black-and-white checkerboards. The checkerboard's appearance and location in each sensor's frames are then processed to recalibrate the sensor.

- Targetless motion-based, wherein each sensor's extrinsic parameters are computed from the trajectory it estimates in its own local coordinates, compared against the trajectories of the other sensors. This type of calibration is more widely used for drones and small robots, but these days the techniques can be applied to AV sensors as well. At Deepen, we use these techniques to calibrate IMUs.

- Targetless appearance-based techniques appear to bring the most promising results. They rely on the intensity distribution captured in a frame or, in some cases, on the appearance of objects in the frame. Because they need no dedicated targets, appearance-based techniques seem practical for AVs. LiDAR sensors are also improving by the day and becoming more readily available, so at Deepen we use these techniques to calibrate AV setups with multiple LiDARs. We're still working on more integrated tools based on this technique, so do keep an eye out for new developments!
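At their core, many target-based setups reduce to fitting the rigid transform that best maps the target points seen by one sensor onto the same points seen by another. The sketch below shows that idea in 2D (yaw plus planar translation) with a closed-form least-squares fit, assuming the corner correspondences are already known. Full 6-DoF extrinsics are usually solved with an SVD-based method such as Kabsch/Umeyama, often followed by nonlinear refinement. This is illustrative only, not Deepen's implementation, and the mounting offsets are hypothetical.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def fit_rigid_2d(src: Sequence[Point], dst: Sequence[Point]) -> Tuple[float, float, float]:
    """Least-squares yaw (radians) and translation (tx, ty) with dst ~ R(yaw) * src + t."""
    if len(src) != len(dst) or len(src) < 2:
        raise ValueError("need at least two corresponding points")
    n = len(src)
    sx, sy = sum(p[0] for p in src) / n, sum(p[1] for p in src) / n
    dx, dy = sum(q[0] for q in dst) / n, sum(q[1] for q in dst) / n
    # Accumulate cross and dot products of the centred point sets.
    num = den = 0.0
    for (px, py), (qx, qy) in zip(src, dst):
        px, py, qx, qy = px - sx, py - sy, qx - dx, qy - dy
        num += px * qy - py * qx
        den += px * qx + py * qy
    yaw = math.atan2(num, den)
    c, s = math.cos(yaw), math.sin(yaw)
    # The translation maps the rotated source centroid onto the destination centroid.
    return yaw, dx - (c * sx - s * sy), dy - (s * sx + c * sy)


def transform(p: Point, yaw: float, tx: float, ty: float) -> Point:
    """Apply a 2D rotation by `yaw` followed by a translation (tx, ty)."""
    c, s = math.cos(yaw), math.sin(yaw)
    return c * p[0] - s * p[1] + tx, s * p[0] + c * p[1] + ty


# Checkerboard corners in sensor A's frame, and the same corners in sensor B's frame,
# related by a hypothetical 5 degree yaw and a (0.3, -0.2) m shift.
corners_a = [(2.0, 0.0), (2.0, 0.5), (2.5, 0.0), (2.5, 0.5), (3.0, 0.25)]
corners_b = [transform(p, math.radians(5.0), 0.3, -0.2) for p in corners_a]
yaw, tx, ty = fit_rigid_2d(corners_a, corners_b)
print(f"yaw = {math.degrees(yaw):.2f} deg, t = ({tx:.3f}, {ty:.3f}) m")
```

With noisy real detections, the same fit returns the transform that minimises the summed squared distance between corresponding corners.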

As mentioned earlier, Deepen provides the right set of tools for specific sensor calibration tasks. Our tools are modelled on both the “target-based” and “targetless” approaches. As R&D in this field brings more innovative calibration approaches, we'll provide them to you right away, so keep an eye out for those as well.

We've now covered quite a few core concepts of sensor calibration. The next section details some, if not all, of Deepen's Sensor Calibration products, along with why we developed each one and the specific pain points it helps you resolve.

How Can Deepen Help You Out?

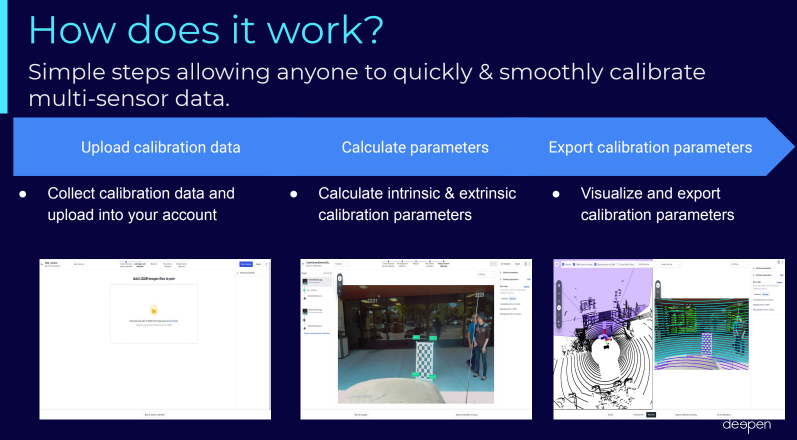

As you might already be aware, at Deepen we're committed to providing the best and most efficient tools for your AV sensor calibration needs. We believe calibrating AV sensors should be as efficient as possible, which is why our products let you start calibrating the sensors in just three steps. The presentation below best represents the actual workflow when using our products.

All products in our Calibration Suite follow this workflow:

- Collect the necessary data from a wide variety of sensor setups, be it a single camera, a single LiDAR, some combination of both and/or stereo-positioned sensors. The data is collected using checkerboard targets; see the image below for reference.

- Calculate the checkerboard coverage using the latest machine learning and computer vision algorithms, then calibrate the necessary sensors based on the error metrics computed by the web app.

- Understand, debug and visualise calibration mistakes through an intuitive, user-friendly web UI and workflow. Calibration mistakes are also quantified through error metrics: the lower the error metric value, the better the calibration.

- Finally, our products test the whole procedure for high fidelity with a robust integrated QA system and sophisticated algorithms optimized for accuracy. Best of all, the UI/UX was designed with the capabilities of non-technicians in mind, so even without a senior engineer on hand, non-experts can use Deepen's Calibration Suite.
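The workflow doesn't define how checkerboard coverage is measured. One simple, hypothetical way to measure it is to split the image into a grid and report the fraction of cells in which at least one checkerboard corner was detected across all captures. Coverage matters because intrinsics estimated only from boards near the image centre tend to model lens distortion near the edges poorly. The function name and the 4×3 grid are assumptions for illustration.

```python
from typing import Iterable, Sequence, Set, Tuple

Pixel = Tuple[float, float]


def checkerboard_coverage(frames: Iterable[Sequence[Pixel]], width: int, height: int,
                          grid_cols: int = 4, grid_rows: int = 3) -> float:
    """Fraction of image grid cells containing at least one detected corner."""
    covered: Set[Tuple[int, int]] = set()
    for corners in frames:
        for u, v in corners:
            if 0 <= u < width and 0 <= v < height:  # ignore detections outside the image
                covered.add((int(v * grid_rows / height), int(u * grid_cols / width)))
    return len(covered) / (grid_rows * grid_cols)


# Two hypothetical captures on a 1280x720 camera: one board near the top-left
# corner, one near the bottom-right corner.
frames = [[(10.0, 10.0), (20.0, 20.0)], [(1270.0, 710.0)]]
print(f"coverage: {checkerboard_coverage(frames, 1280, 720):.0%}")
```

Here only 2 of the 12 cells are touched, which would prompt the technician to capture more board poses spread across the image.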

As you can see, Deepen's Calibration Suite is packed with useful features, and more are yet to come. Above all, we understand how important calibrating sensors is to the safety of everyone on the road. That's why we built our tools to reduce human error while calibrating the parameters as accurately as possible. With Deepen, your technicians' time can be put to more productive use elsewhere, perhaps in R&D. We take pride in stating that our products will help you complete calibration tasks with the utmost ease and efficiency, without compromising on safety.

We realise the world is progressing fast towards a future where human-driven vehicles could become a thing of the past. To ensure that AVs are safe to use in such a future, we need to understand and adapt to the limitations of today's AVs. That's why at Deepen we're continuously striving to develop the best calibration tools to make AVs safer than ever. If this brief introduction to our suite of sensor calibration products piqued your interest, do reach out to us and we'll be glad to help.